Image classification consists in extracting added-value information from images. Such processing methods

classify pixels within images into geographical connected zones with similar properties, and identified by a

common class label. The classification can be either unsupervised or supervised.

Unsupervised classification does not require any additional information about the properties of the input

image to classify it. On the contrary, supervised methods need a preliminary learning to be computed over

training datasets having similar properties than the image to classify, in order to build a classification

model.

The OTB classification is implemented as a generic Machine Learning framework, supporting several

possible machine learning libraries as backends. The base class otb::MachineLearningModel defines

this framework. As of now libSVM (the machine learning library historically integrated in

OTB), machine learning methods of OpenCV library ([14]) and also Shark machine learning

library ([67]) are available. Both supervised and unsupervised classifiers are supported in the

framework.

The current list of classifiers available through the same generic interface within the OTB is:

- LibSVM: Support Vector Machines classifier based on libSVM.

- SVM: Support Vector Machines classifier based on OpenCV, itself based on libSVM.

- Bayes: Normal Bayes classifier based on OpenCV.

- Boost: Boost classifier based on OpenCV.

- DT: Decision Tree classifier based on OpenCV.

- RF: Random Forests classifier based on the Random Trees in OpenCV.

- GBT: Gradient Boosted Tree classifier based on OpenCV (removed in version 3).

- KNN: K-Nearest Neighbors classifier based on OpenCV.

- ANN: Artificial Neural Network classifier based on OpenCV.

- SharkRF : Random Forests classifier based on Shark.

- SharkKM : KMeans unsupervised classifier based on Shark.

These models have a common interface, with the following major functions:

- SetInputListSample(InputListSampleType ⋆in) : set the list of input samples

- SetTargetListSample(TargetListSampleType ⋆in) : set the list of target samples

- Train() : train the model based on input samples

- Save(...) : saves the model to file

- Load(...) : load a model from file

- Predict(...) : predict a target value for an input sample

- PredictBatch(...) : prediction on a list of input samples

The PredictBatch(...) function can be multi-threaded when called either from a multi-threaded filter, or

from a single location. In the later case, it creates several threads using OpenMP. There is a factory

mechanism on top of the model class (see otb::MachineLearningModelFactory ). Given an input file,

the static function CreateMachineLearningModel(...) is able to instantiate a model of the right

type.

For unsupervised models, the target samples still have to be set. They won’t be used so you can fill a

ListSample with zeros.

The models are trained from a list of input samples, stored in a itk::Statistics::ListSample

. For supervised classifiers, they also need a list of targets associated to each input sample.

Whatever the source of samples, it has to be converted into a ListSample before being fed into the

model.

Then, model-specific parameters can be set. And finally, the Train() method starts the learning step. Once

the model is trained it can be saved to file using the function Save(). The following examples show how to

do that.

The source code for this example can be found in the file

Examples/Learning/TrainMachineLearningModelFromSamplesExample.cxx.

This example illustrates the use of the otb::SVMMachineLearningModel class, which inherits from the

otb::MachineLearningModel class. This class allows the estimation of a classification model (supervised

learning) from samples. In this example, we will train an SVM model with 4 output classes, from 1000

randomly generated training samples, each of them having 7 components. We start by including the

appropriate header files.

// List sample generator #include "otbListSampleGenerator.h" // Random number generator

// SVM model Estimator #include "otbSVMMachineLearningModel.h"

The input parameters of the sample generator and of the SVM classifier are initialized.

int nbSamples = 1000; int nbSampleComponents = 7; int nbClasses = 4;

Two lists are generated into a itk::Statistics::ListSample which is the structure used

to handle both lists of samples and of labels for the machine learning classes derived from

otb::MachineLearningModel . The first list is composed of feature vectors representing multi-component

samples, and the second one is filled with their corresponding class labels. The list of labels is composed of

scalar values.

// Input related typedefs typedef float InputValueType;

typedef itk::VariableLengthVector<InputValueType> InputSampleType;

typedef itk::Statistics::ListSample<InputSampleType> InputListSampleType; // Target related typedefs

typedef int TargetValueType; typedef itk::FixedArray<TargetValueType, 1> TargetSampleType;

typedef itk::Statistics::ListSample<TargetSampleType> TargetListSampleType;

InputListSampleType::Pointer InputListSample = InputListSampleType::New();

TargetListSampleType::Pointer TargetListSample = TargetListSampleType::New();

InputListSample->SetMeasurementVectorSize(nbSampleComponents);

In this example, the list of multi-component training samples is randomly filled with a random number

generator based on the itk::Statistics::MersenneTwisterRandomVariateGenerator class. Each

component’s value is generated from a normal law centered around the corresponding class label of each

sample multiplied by 100, with a standard deviation of 10.

itk::Statistics::MersenneTwisterRandomVariateGenerator::Pointer randGen;

randGen = itk::Statistics::MersenneTwisterRandomVariateGenerator::GetInstance();

// Filling the two input training lists for (int i = 0; i < nbSamples; ++i)

{ InputSampleType sample; TargetValueType label = (i % nbClasses) + 1;

// Multi-component sample randomly filled from a normal law for each component

sample.SetSize(nbSampleComponents); for (int itComp = 0; itComp < nbSampleComponents; ++itComp)

{ sample[itComp] = randGen->GetNormalVariate(100 ⋆ label, 10); }

InputListSample->PushBack(sample); TargetListSample->PushBack(label); }

Once both sample and label lists are generated, the second step consists in declaring the machine learning

classifier. In our case we use an SVM model with the help of the otb::SVMMachineLearningModel class

which is derived from the otb::MachineLearningModel class. This pure virtual class is based on the

machine learning framework of the OpenCV library ([14]) which handles other classifiers than the SVM.

typedef otb::SVMMachineLearningModel<InputValueType, TargetValueType> SVMType;

SVMType::Pointer SVMClassifier = SVMType::New(); SVMClassifier->SetInputListSample(InputListSample);

SVMClassifier->SetTargetListSample(TargetListSample); SVMClassifier->SetKernelType(CvSVM::LINEAR);

Once the classifier is parametrized with both input lists and default parameters, except for the kernel type in

our example of SVM model estimation, the model training is computed with the Train method. Finally, the

Save method exports the model to a text file. All the available classifiers based on OpenCV are

implemented with these interfaces. Like for the SVM model training, the other classifiers can be

parametrized with specific settings.

SVMClassifier->Train(); SVMClassifier->Save(outputModelFileName);

The source code for this example can be found in the file

Examples/Learning/TrainMachineLearningModelFromImagesExample.cxx.

This example illustrates the use of the otb::MachineLearningModel class. This class allows the

estimation of a classification model (supervised learning) from images. In this example, we will train an

SVM with 4 classes. We start by including the appropriate header files.

// List sample generator #include "otbListSampleGenerator.h"

// Extract a ROI of the vectordata #include "otbVectorDataIntoImageProjectionFilter.h"

// SVM model Estimator #include "otbSVMMachineLearningModel.h"

In this framework, we must transform the input samples store in a vector data into a

itk::Statistics::ListSample which is the structure compatible with the machine learning classes. On

the one hand, we are using feature vectors for the characterization of the classes, and on the other hand, the

class labels are scalar values. We first re-project the input vector data over the input image, using the

otb::VectorDataIntoImageProjectionFilter class. To convert the input samples store in a vector

data into a itk::Statistics::ListSample , we use the otb::ListSampleGenerator

class.

// VectorData projection filter typedef otb::VectorDataIntoImageProjectionFilter<VectorDataType, InputImageType>

VectorDataReprojectionType;

InputReaderType::Pointer inputReader = InputReaderType::New(); inputReader->SetFileName(inputImageFileName);

InputImageType::Pointer image = inputReader->GetOutput(); image->UpdateOutputInformation();

// Read the Vectordata VectorDataReaderType::Pointer vectorReader = VectorDataReaderType::New();

vectorReader->SetFileName(trainingShpFileName); vectorReader->Update();

VectorDataType::Pointer vectorData = vectorReader->GetOutput(); vectorData->Update();

VectorDataReprojectionType::Pointer vdreproj = VectorDataReprojectionType::New();

vdreproj->SetInputImage(image); vdreproj->SetInput(vectorData);

vdreproj->SetUseOutputSpacingAndOriginFromImage(false); vdreproj->Update();

typedef otb::ListSampleGenerator<InputImageType, VectorDataType>

ListSampleGeneratorType;

ListSampleGeneratorType::Pointer sampleGenerator; sampleGenerator = ListSampleGeneratorType::New();

sampleGenerator->SetInput(image); sampleGenerator->SetInputVectorData(vdreproj->GetOutput());

sampleGenerator->SetClassKey("Class"); sampleGenerator->Update();

Now, we need to declare the machine learning model which will be used by the classifier. In this example,

we train an SVM model. The otb::SVMMachineLearningModel class inherits from the pure virtual class

otb::MachineLearningModel which is templated over the type of values used for the measures and the

type of pixels used for the labels. Most of the classification and regression algorithms available through this

interface in OTB is based on the OpenCV library [14]. Specific methods can be used to set classifier

parameters. In the case of SVM, we set here the type of the kernel. Other parameters are let with their

default values.

typedef otb::SVMMachineLearningModel <InputImageType::InternalPixelType,

ListSampleGeneratorType::ClassLabelType> SVMType;

SVMType::Pointer SVMClassifier = SVMType::New();

SVMClassifier->SetInputListSample(sampleGenerator->GetTrainingListSample());

SVMClassifier->SetTargetListSample(sampleGenerator->GetTrainingListLabel());

SVMClassifier->SetKernelType(CvSVM::LINEAR);

The machine learning interface is generic and gives access to other classifiers. We now train the SVM

model using the Train and save the model to a text file using the Save method.

SVMClassifier->Train(); SVMClassifier->Save(outputModelFileName);

You can now use the Predict method which takes a itk::Statistics::ListSample as input and

estimates the label of each input sample using the model. Finally, the otb::ImageClassificationModel

inherits from the itk::ImageToImageFilter and allows classifying pixels in the input image by

predicting their labels using a model.

For the prediction step, the usual process is to:

- Load an existing model from a file.

- Convert the data to predict into a ListSample.

- Run the PredictBatch(...) function.

There is an image filter that perform this step on a whole image, supporting streaming and multi-threading:

otb::ImageClassificationFilter .

The source code for this example can be found in the file

Examples/Classification/SupervisedImageClassificationExample.cxx.

In OTB, a generic streamed filter called otb::ImageClassificationFilter is available to classify any

input multi-channel image according to an input classification model file. This filter is generic because it

works with any classification model type (SVM, KNN, Artificial Neural Network,...) generated within the

OTB generic Machine Learning framework based on OpenCV ([14]). The input model file is smartly parsed

according to its content in order to identify which learning method was used to generate it. Once the

classification method and model are known, the input image can be classified. More details are

given in subsections ?? and ?? to generate a classification model either from samples or from

images. In this example we will illustrate its use. We start by including the appropriate header

files.

#include "otbMachineLearningModelFactory.h" #include "otbImageClassificationFilter.h"

We will assume double precision input images and will also define the type for the labeled

pixels.

const unsigned int Dimension = 2; typedef double PixelType;

typedef unsigned short LabeledPixelType;

Our classifier is generic enough to be able to process images with any number of bands. We read the input

image as a otb::VectorImage . The labeled image will be a scalar image.

typedef otb::VectorImage<PixelType, Dimension> ImageType;

typedef otb::Image<LabeledPixelType, Dimension> LabeledImageType;

We can now define the type for the classifier filter, which is templated over its input and output image

types.

typedef otb::ImageClassificationFilter<ImageType, LabeledImageType>

ClassificationFilterType;

typedef ClassificationFilterType::ModelType ModelType;

Moreover, it is necessary to define a otb::MachineLearningModelFactory which is templated over its

input and output pixel types. This factory is used to parse the input model file and to define which

classification method to use.

typedef otb::MachineLearningModelFactory<PixelType, LabeledPixelType>

MachineLearningModelFactoryType;

And finally, we define the reader and the writer. Since the images to classify can be very big, we will use a

streamed writer which will trigger the streaming ability of the classifier.

typedef otb::ImageFileReader<ImageType> ReaderType;

typedef otb::ImageFileWriter<LabeledImageType> WriterType;

We instantiate the classifier and the reader objects and we set the existing model obtained in a previous

training step.

ClassificationFilterType::Pointer filter = ClassificationFilterType::New();

ReaderType::Pointer reader = ReaderType::New(); reader->SetFileName(infname);

The input model file is parsed according to its content and the generated model is then loaded within the

otb::ImageClassificationFilter .

ModelType::Pointer model; model = MachineLearningModelFactoryType::CreateMachineLearningModel(

modelfname,

MachineLearningModelFactoryType::ReadMode);

model->Load(modelfname); filter->SetModel(model);

We plug the pipeline and trigger its execution by updating the output of the writer.

filter->SetInput(reader->GetOutput()); WriterType::Pointer writer = WriterType::New();

writer->SetInput(filter->GetOutput()); writer->SetFileName(outfname); writer->Update();

The classifiers are integrated in several OTB Applications. There is a base class that provides an easy access

to all the classifiers: otb::Wrapper::LearningApplicationBase . As each machine learning model has

a specific set of parameters, the base class LearningApplicationBase knows how to expose each type of

classifier with its dedicated parameters (a task that is a bit tedious so we want to implement it only once).

The DoInit() method creates a choice parameter named classifier which contains the different

supported classifiers along with their parameters.

The function Train(...) provide an easy way to train the selected classifier, with the corresponding

parameters, and save the model to file.

On the other hand, the function Classify(...) allows to load a model from file and apply it on a list of

samples.

Kernel based learning methods in general and the Support Vector Machines (SVM) in particular, have been

introduced in the last years in learning theory for classification and regression tasks, [132]. SVM have

been successfully applied to text categorization, [74], and face recognition, [102]. Recently,

they have been successfully used for the classification of hyperspectral remote-sensing images,

[15].

Simply stated, the approach consists in searching for the separating surface between 2 classes by the

determination of the subset of training samples which best describes the boundary between the 2 classes.

These samples are called support vectors and completely define the classification system. In the case where

the two classes are nonlinearly separable, the method uses a kernel expansion in order to make projections

of the feature space onto higher dimensionality spaces where the separation of the classes becomes

linear.

This subsection reminds the basic principles of SVM learning and classification. A good tutorial on SVM

can be found in, [17].

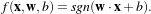

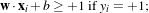

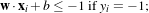

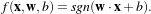

We have N samples represented by the couple (yi,xi),i = 1…N where yi ∈{-1,+1} is the class label and

xi ∈ℝn is the feature vector of dimension n. A classifier is a function

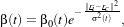

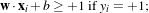

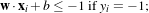

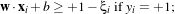

where

α are the classifier parameters. The SVM finds the optimal separating hyperplane which fulfills the

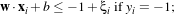

following constraints :

where

α are the classifier parameters. The SVM finds the optimal separating hyperplane which fulfills the

following constraints :

- The samples with labels +1 and -1 are on different sides of the hyperplane.

- The distance of the closest vectors to the hyperplane is maximised. These are the support

vectors (SV) and this distance is called the margin.

The separating hyperplane has the equation

with

w being its normal vector and x being any point of the hyperplane. The orthogonal distance to

the origin is given by

with

w being its normal vector and x being any point of the hyperplane. The orthogonal distance to

the origin is given by  . Vectors located outside the hyperplane have either w ⋅x +b > 0 or

w ⋅x +b < 0.

. Vectors located outside the hyperplane have either w ⋅x +b > 0 or

w ⋅x +b < 0.

Therefore, the classifier function can be written as

The SVs are placed on two hyperplanes which are parallel to the optimal separating one. In order to find the

optimal hyperplane, one sets w and b :

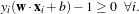

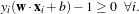

Since there must not be any vector inside the margin, the following constraint can be used:

which can be rewritten as

which can be rewritten as

The orthogonal distances of the 2 parallel hyperplanes to the origin are  and

and  . Therefore the

modulus of the margin is equal to

. Therefore the

modulus of the margin is equal to  and it has to be maximised.

and it has to be maximised.

Thus, the problem to be solved is:

- Find w and b which minimise

- under the constraint : yi(w ⋅xi+b) ≥ 1 i = 1…N.

This problem can be solved by using the Lagrange multipliers with one multiplier per sample. It can be

shown that only the support vectors will have a positive Lagrange multiplier.

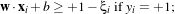

In the case where the two classes are not exactly linearly separable, one can modify the constraints above by

using

If ξi > 1, one considers that the sample is wrong. The function which has then to be minimised is

∥w∥2 +C

∥w∥2 +C ;, where C is a tolerance parameter. The optimisation problem is the same than in the

linear case, but one multiplier has to be added for each new constraint ξi ≥ 0.

;, where C is a tolerance parameter. The optimisation problem is the same than in the

linear case, but one multiplier has to be added for each new constraint ξi ≥ 0.

If the decision surface needs to be non-linear, this solution cannot be applied and the kernel approach has to

be adopted.

One drawback of the SVM is that, in their basic version, they can only solve two-class problems. Some

works exist in the field of multi-class SVM (see [4, 136], and the comparison made by [59]), but they are

not used in our system.

You have to be aware that to achieve better convergence of the algorithm it is strongly advised to normalize

feature vector components in the [-1;1] interval.

For problems with N > 2 classes, one can choose either to train N SVM (one class against all the others), or

to train N ×(N -1) SVM (one class against each of the others). In the second approach, which is the one

that we use, the final decision is taken by choosing the class which is most often selected by the whole set of

SVM.

The Random Forests algorithm is also available in OTB machine learning framework. This model builds a

set of decision trees. Each tree may not give a reliable prediction, but taking them together, they form

a robust classifier. The prediction of this model is the mode of the predictions of individual

trees.

There are two implementations: one in OpenCV and the other on in Shark. The Shark implementation has a

noteworthy advantage: the training step is parallel. It uses the following parameters:

- The number of trees to train

- The number of random attributes to investigate at each node

- The maximum node size to decide a split

- The ratio of the original training dataset to use as the out of bag sample

Except these specific parameter, its usage is exactly the same as the other machine learning models (such as

the SVM model).

OTB has developed a specific interface for user-defined kernels. However, the following functions use a

deprecated OTB interface. The code source for these Generic Kernels has been removed from the official

repository. It is now available as a remote module: GKSVM.

A function k(⋅,⋅) is considered to be a kernel when:

∀g(⋅) ∈ 2(Rn) 2(Rn) | so that ∫

g(x)2dx be finite, | (19.1)

|

| then ∫

k(x,y)g(x)g(y)dxdy ≥ 0, | | |

which is known as the Mercer condition.

When defined through the OTB, a kernel is a class that inherits from GenericKernelFunctorBase. Several

virtual functions have to be overloaded:

- The Evaluate function, which implements the behavior of the kernel itself. For instance, the

classical linear kernel could be re-implemented with:

double

MyOwnNewKernel

::Evaluate ( const svm_node ⋆ x, const svm_node ⋆ y,

const svm_parameter & param ) const

{

return this->dot(x,y);

}

This simple example shows that the classical dot product is already implemented into

otb::GenericKernelFunctorBase::dot() as a protected function.

- The Update() function which synchronizes local variables and their integration into the initial SVM

procedure. The following examples will show the way to use it.

Some pre-defined generic kernels have already been implemented in OTB:

- otb::MixturePolyRBFKernelFunctor which implements a linear mixture of a polynomial

and a RBF kernel;

- otb::NonGaussianRBFKernelFunctor which implements a non gaussian RBF kernel;

- otb::SpectralAngleKernelFunctor, a kernel that integrates the Spectral Angle, instead of

the Euclidean distance, into an inverse multiquadric kernel. This kernel may be appropriated

when using multispectral data.

- otb::ChangeProfileKernelFunctor, a kernel which is dedicated to the supervized

classification of the multiscale change profile presented in section 21.5.1.

The source code for this example can be found in the file

Examples/Learning/SVMGenericKernelImageModelEstimatorExample.cxx.

This example illustrates the modifications to be added to the use of otb::SVMImageModelEstimator in

order to add a user defined kernel. This initial program has been explained in section ??.

The first thing to do is to include the header file for the new kernel.

#include "otbSVMImageModelEstimator.h" #include "otbMixturePolyRBFKernelFunctor.h"

Once the otb::SVMImageModelEstimator is instantiated, it is possible to add the new kernel and its

parameters.

Then in addition to the initial code:

EstimatorType::Pointer svmEstimator = EstimatorType::New();

svmEstimator->SetSVMType(C_SVC); svmEstimator->SetInputImage(inputReader->GetOutput());

svmEstimator->SetTrainingImage(trainingReader->GetOutput());

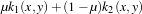

The instantiation of the kernel is to be implemented. The kernel which is used here is a linear combination

of a polynomial kernel and an RBF one. It is written as

with

k1(x,y) =

with

k1(x,y) =  d and k2(x,y) = exp

d and k2(x,y) = exp . Then, the specific parameters of this kernel

are:

. Then, the specific parameters of this kernel

are:

- Mixture (μ),

- GammaPoly (γ1),

- CoefPoly (c0),

- DegreePoly (d),

- GammaRBF (γ2).

Their instantiations are achieved through the use of the SetValue function.

otb::MixturePolyRBFKernelFunctor myKernel; myKernel.SetValue("Mixture", 0.5);

myKernel.SetValue("GammaPoly", 1.0); myKernel.SetValue("CoefPoly", 0.0);

myKernel.SetValue("DegreePoly", 1); myKernel.SetValue("GammaRBF", 1.5); myKernel.Update();

Once the kernel’s parameters are affected and the kernel updated, the connection to

otb::SVMImageModelEstimator takes place here.

svmEstimator->SetKernelFunctor(&myKernel); svmEstimator->SetKernelType(GENERIC);

The model estimation procedure is triggered by calling the estimator’s Update method.

The rest of the code remains unchanged...

svmEstimator->SaveModel(outputModelFileName);

In the file outputModelFileName a specific line will appear when using a generic kernel. It gives the name

of the kernel and its parameters name and value.

The source code for this example can be found in the file

Examples/Learning/SVMGenericKernelImageClassificationExample.cxx.

This example illustrates the modifications to be added to use the otb::SVMClassifier class for

performing SVM classification on images with a user-defined kernel. In this example, we will use an SVM

model estimated in the previous section to separate between water and non-water pixels by using the RGB

values only. The first thing to do is include the header file for the class as well as the header of the

appropriated kernel to be used.

#include "otbSVMClassifier.h" #include "otbMixturePolyRBFKernelFunctor.h"

We need to declare the SVM model which is to be used by the classifier. The SVM model is templated over

the type of value used for the measures and the type of pixel used for the labels.

typedef otb::SVMModel<PixelType, LabelPixelType> ModelType; ModelType::Pointer model = ModelType::New();

After instantiation, we can load a model saved to a file (see section ?? for an example of model estimation

and storage to a file).

When using a user defined kernel, an explicit instantiation has to be performed.

otb::MixturePolyRBFKernelFunctor myKernel; model->SetKernelFunctor(&myKernel);

Then, the rest of the classification program remains unchanged.

model->LoadModel(modelFilename);

The KMeans algorithm has been implemented in Shark library, and has been wrapped in the OTB machine

learning framework. It is the first unsupervised algorithm in this framework. It can be used in the same way

as other machine learning models. Remember that even if unsupervised model don’t use a label information

on the samples, the target ListSample still has to be set in MachineLearningModel. A ListSample filled

with zeros can be used.

This model uses a hard clustering model with the following parameters:

- The maximum number of iterations

- The number of centroids (K)

- An option to normalize input samples

As with Shark Random Forests, the training step is parallel.

The source code for this example can be found in the file

Examples/Classification/ScalarImageKmeansClassifier.cxx.

This example shows how to use the KMeans model for classifying the pixel of a scalar image.

The itk::Statistics::ScalarImageKmeansImageFilter is used for taking a scalar image and

applying the K-Means algorithm in order to define classes that represents statistical distributions of

intensity values in the pixels. The classes are then used in this filter for generating a labeled image where

every pixel is assigned to one of the classes.

#include "otbImage.h" #include "otbImageFileReader.h" #include "otbImageFileWriter.h"

#include "itkScalarImageKmeansImageFilter.h"

First we define the pixel type and dimension of the image that we intend to classify. With this image type

we can also declare the otb::ImageFileReader needed for reading the input image, create one and set

its input filename.

typedef signed short PixelType; const unsigned int Dimension = 2;

typedef otb::Image<PixelType, Dimension> ImageType; typedef otb::ImageFileReader<ImageType> ReaderType;

ReaderType::Pointer reader = ReaderType::New(); reader->SetFileName(inputImageFileName);

With the ImageType we instantiate the type of the itk::ScalarImageKmeansImageFilter that will

compute the K-Means model and then classify the image pixels.

typedef itk::ScalarImageKmeansImageFilter<ImageType> KMeansFilterType;

KMeansFilterType::Pointer kmeansFilter = KMeansFilterType::New();

kmeansFilter->SetInput(reader->GetOutput()); const unsigned int numberOfInitialClasses = atoi(argv[4]);

In general the classification will produce as output an image whose pixel values are integers associated to

the labels of the classes. Since typically these integers will be generated in order (0, 1, 2, ...N), the output

image will tend to look very dark when displayed with naive viewers. It is therefore convenient

to have the option of spreading the label values over the dynamic range of the output image

pixel type. When this is done, the dynamic range of the pixels is divided by the number of

classes in order to define the increment between labels. For example, an output image of 8

bits will have a dynamic range of [0:255], and when it is used for holding four classes, the

non-contiguous labels will be (0, 64, 128, 192). The selection of the mode to use is done with the method

SetUseContiguousLabels().

const unsigned int useNonContiguousLabels = atoi(argv[3]);

kmeansFilter->SetUseNonContiguousLabels(useNonContiguousLabels);

For each one of the classes we must provide a tentative initial value for the mean of the class. Given that this

is a scalar image, each one of the means is simply a scalar value. Note however that in a general case of

K-Means, the input image would be a vector image and therefore the means will be vectors of the same

dimension as the image pixels.

for (unsigned k = 0; k < numberOfInitialClasses; ++k) {

const double userProvidedInitialMean = atof(argv[k + argoffset]);

kmeansFilter->AddClassWithInitialMean(userProvidedInitialMean); }

The itk::ScalarImageKmeansImageFilter is predefined for producing an 8 bits scalar image as

output. This output image contains labels associated to each one of the classes in the K-Means

algorithm. In the following lines we use the OutputImageType in order to instantiate the type of a

otb::ImageFileWriter . Then create one, and connect it to the output of the classification

filter.

typedef KMeansFilterType::OutputImageType OutputImageType;

typedef otb::ImageFileWriter<OutputImageType> WriterType; WriterType::Pointer writer = WriterType::New();

writer->SetInput(kmeansFilter->GetOutput()); writer->SetFileName(outputImageFileName);

We are now ready for triggering the execution of the pipeline. This is done by simply invoking the

Update() method in the writer. This call will propagate the update request to the reader and then to the

classifier.

try { writer->Update(); } catch (itk::ExceptionObject& excp) {

std::cerr << "Problem encountered while writing "; std::cerr << " image file : " << argv[2] << std::endl;

std::cerr << excp << std::endl; return EXIT_FAILURE; }

At this point the classification is done, the labeled image is saved in a file, and we can take a look at

the means that were found as a result of the model estimation performed inside the classifier

filter.

KMeansFilterType::ParametersType estimatedMeans = kmeansFilter->GetFinalMeans();

const unsigned int numberOfClasses = estimatedMeans.Size();

for (unsigned int i = 0; i < numberOfClasses; ++i) { std::cout << "cluster[" << i << "] ";

std::cout << " estimated mean : " << estimatedMeans[i] << std::endl; }

Figure 19.1 illustrates the effect of this filter with three classes. The means can be estimated by

ScalarImageKmeansModelEstimator.cxx.

The source code for this example can be found in the file

Examples/Classification/ScalarImageKmeansModelEstimator.cxx.

This example shows how to compute the KMeans model of an Scalar Image.

The itk::Statistics::KdTreeBasedKmeansEstimator is used for taking a scalar image and applying

the K-Means algorithm in order to define classes that represents statistical distributions of intensity values

in the pixels. One of the drawbacks of this technique is that the spatial distribution of the pixels is not

considered at all. It is common therefore to combine the classification resulting from K-Means with other

segmentation techniques that will use the classification as a prior and add spatial information to it in order

to produce a better segmentation.

// Create a List from the scalar image typedef itk::Statistics::ImageToListSampleAdaptor<ImageType> AdaptorType;

AdaptorType::Pointer adaptor = AdaptorType::New(); adaptor->SetImage(reader->GetOutput());

// Define the Measurement vector type from the AdaptorType // Create the K-d tree structure

typedef itk::Statistics::WeightedCentroidKdTreeGenerator< AdaptorType>

TreeGeneratorType; TreeGeneratorType::Pointer treeGenerator = TreeGeneratorType::New();

treeGenerator->SetSample(adaptor); treeGenerator->SetBucketSize(16); treeGenerator->Update();

typedef TreeGeneratorType::KdTreeType TreeType;

typedef itk::Statistics::KdTreeBasedKmeansEstimator<TreeType> EstimatorType;

EstimatorType::Pointer estimator = EstimatorType::New(); const unsigned int numberOfClasses = 4;

EstimatorType::ParametersType initialMeans(numberOfClasses); initialMeans[0] = 25.0;

initialMeans[1] = 125.0; initialMeans[2] = 250.0; estimator->SetParameters(initialMeans);

estimator->SetKdTree(treeGenerator->GetOutput()); estimator->SetMaximumIteration(200);

estimator->SetCentroidPositionChangesThreshold(0.0); estimator->StartOptimization();

EstimatorType::ParametersType estimatedMeans = estimator->GetParameters();

for (unsigned int i = 0; i < numberOfClasses; ++i) { std::cout << "cluster[" << i << "] " << std::endl;

std::cout << " estimated mean : " << estimatedMeans[i] << std::endl; }

The source code for this example can be found in the file

Examples/Classification/KMeansImageClassificationExample.cxx.

The K-Means classification proposed by ITK for images is limited to scalar images and is not streamed. In

this example, we show how the use of the otb::KMeansImageClassificationFilter allows for a

simple implementation of a K-Means classification application. We will start by including the appropirate

header file.

#include "otbKMeansImageClassificationFilter.h"

We will assume double precision input images and will also define the type for the labeled

pixels.

const unsigned int Dimension = 2; typedef double PixelType;

typedef unsigned short LabeledPixelType;

Our classifier will be generic enough to be able to process images with any number of bands. We read the

images as otb::VectorImage s. The labeled image will be a scalar image.

typedef otb::VectorImage<PixelType, Dimension> ImageType;

typedef otb::Image<LabeledPixelType, Dimension> LabeledImageType;

We can now define the type for the classifier filter, which is templated over its input and output image

types.

typedef otb::KMeansImageClassificationFilter<ImageType, LabeledImageType> ClassificationFilterType;

typedef ClassificationFilterType::KMeansParametersType KMeansParametersType;

And finally, we define the reader and the writer. Since the images to classify can be very big, we will use a

streamed writer which will trigger the streaming ability of the classifier.

typedef otb::ImageFileReader<ImageType> ReaderType;

typedef otb::ImageFileWriter<LabeledImageType> WriterType;

We instantiate the classifier and the reader objects and we set their parameters. Please note the call

of the GenerateOutputInformation() method on the reader in order to have available the

information about the input image (size, number of bands, etc.) without needing to actually read the

image.

ClassificationFilterType::Pointer filter = ClassificationFilterType::New();

ReaderType::Pointer reader = ReaderType::New(); reader->SetFileName(infname);

reader->GenerateOutputInformation();

The classifier needs as input the centroids of the classes. We declare the parameter vector, and we read the

centroids from the arguments of the program.

const unsigned int sampleSize = ClassificationFilterType::MaxSampleDimension;

const unsigned int parameterSize = nbClasses ⋆ sampleSize; KMeansParametersType parameters;

parameters.SetSize(parameterSize); parameters.Fill(0); for (unsigned int i = 0; i < nbClasses; ++i)

{ for (unsigned int j = 0; j < reader->GetOutput()->GetNumberOfComponentsPerPixel(); ++j)

{ parameters[i ⋆ sampleSize + j] = atof(argv[4 + i ⋆

reader->GetOutput()->GetNumberOfComponentsPerPixel() + j]);

} } std::cout << "Parameters: " << parameters << std::endl;

We set the parameters for the classifier, we plug the pipeline and trigger its execution by updating the output

of the writer.

filter->SetCentroids(parameters); filter->SetInput(reader->GetOutput());

WriterType::Pointer writer = WriterType::New(); writer->SetInput(filter->GetOutput());

writer->SetFileName(outfname); writer->Update();

The source code for this example can be found in the file

Examples/Classification/KdTreeBasedKMeansClustering.cxx.

K-means clustering is a popular clustering algorithm because it is simple and usually converges to a

reasonable solution. The k-means algorithm works as follows:

- Obtains the initial k means input from the user.

- Assigns each measurement vector in a sample container to its closest mean among the k

number of means (i.e., update the membership of each measurement vectors to the nearest of

the k clusters).

- Calculates each cluster’s mean from the newly assigned measurement vectors (updates the

centroid (mean) of k clusters).

- Repeats step 2 and step 3 until it meets the termination criteria.

The most common termination criteria is that if there is no measurement vector that changes its cluster

membership from the previous iteration, then the algorithm stops.

The itk::Statistics::KdTreeBasedKmeansEstimator is a variation of this logic. The k-means

clustering algorithm is computationally very expensive because it has to recalculate the mean at each

iteration. To update the mean values, we have to calculate the distance between k means and each and every

measurement vector. To reduce the computational burden, the KdTreeBasedKmeansEstimator uses a special

data structure: the k-d tree ( itk::Statistics::KdTree ) with additional information. The additional

information includes the number and the vector sum of measurement vectors under each node under the tree

architecture.

With such additional information and the k-d tree data structure, we can reduce the computational cost of

the distance calculation and means. Instead of calculating each measurement vectors and k means, we can

simply compare each node of the k-d tree and the k means. This idea of utilizing a k-d tree can be found in

multiple articles [5] [104] [78]. Our implementation of this scheme follows the article by the Kanungo et al

[78].

We use the itk::Statistics::ListSample as the input sample, the itk::Vector as the

measurement vector. The following code snippet includes their header files.

#include "itkVector.h" #include "itkListSample.h"

Since this k-means algorithm requires a itk::Statistics::KdTree object as an input, we include the

KdTree class header file. As mentioned above, we need a k-d tree with the vector sum and the number of

measurement vectors. Therefore we use the itk::Statistics::WeightedCentroidKdTreeGenerator

instead of the itk::Statistics::KdTreeGenerator that generate a k-d tree without such additional

information.

#include "itkKdTree.h" #include "itkWeightedCentroidKdTreeGenerator.h"

The KdTreeBasedKmeansEstimator class is the implementation of the k-means algorithm. It does not create

k clusters. Instead, it returns the mean estimates for the k clusters.

#include "itkKdTreeBasedKmeansEstimator.h"

To generate the clusters, we must create k instances of itk::Statistics::EuclideanDistanceMetric

function as the membership functions for each cluster and plug that—along with a sample—into an

itk::Statistics::SampleClassifierFilter object to get a itk::Statistics::MembershipSample

that stores pairs of measurement vectors and their associated class labels (k labels).

#include "itkMinimumDecisionRule.h" #include "itkSampleClassifierFilter.h"

We will fill the sample with random variables from two normal distribution using the

itk::Statistics::NormalVariateGenerator .

#include "itkNormalVariateGenerator.h"

Since the NormalVariateGenerator class only supports 1-D, we define our measurement

vector type as one component vector. We then, create a ListSample object for data inputs. Each

measurement vector is of length 1. We set this using the SetMeasurementVectorSize() method.

typedef itk::Vector<double, 1> MeasurementVectorType;

typedef itk::Statistics::ListSample<MeasurementVectorType> SampleType;

SampleType::Pointer sample = SampleType::New(); sample->SetMeasurementVectorSize(1);

The following code snippet creates a NormalVariateGenerator object. Since the random variable generator

returns values according to the standard normal distribution (The mean is zero, and the standard deviation is

one), before pushing random values into the sample, we change the mean and standard deviation.

We want two normal (Gaussian) distribution data. We have two for loops. Each for loop uses

different mean and standard deviation. Before we fill the sample with the second distribution data,

we call Initialize(random seed) method, to recreate the pool of random variables in the

normalGenerator.

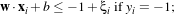

To see the probability density plots from the two distribution, refer to the Figure 19.2.

typedef itk::Statistics::NormalVariateGenerator NormalGeneratorType;

NormalGeneratorType::Pointer normalGenerator = NormalGeneratorType::New();

normalGenerator->Initialize(101); MeasurementVectorType mv; double mean = 100;

double standardDeviation = 30; for (unsigned int i = 0; i < 100; ++i)

{ mv[0] = (normalGenerator->GetVariate() ⋆ standardDeviation) + mean;

sample->PushBack(mv); } normalGenerator->Initialize(3024); mean = 200;

standardDeviation = 30; for (unsigned int i = 0; i < 100; ++i) {

mv[0] = (normalGenerator->GetVariate() ⋆ standardDeviation) + mean; sample->PushBack(mv); }

We create a k-d tree.

typedef itk::Statistics::WeightedCentroidKdTreeGenerator<SampleType> TreeGeneratorType;

TreeGeneratorType::Pointer treeGenerator = TreeGeneratorType::New();

treeGenerator->SetSample(sample); treeGenerator->SetBucketSize(16); treeGenerator->Update();

Once we have the k-d tree, it is a simple procedure to produce k mean estimates.

We create the KdTreeBasedKmeansEstimator. Then, we provide the initial mean values using the

SetParameters(). Since we are dealing with two normal distribution in a 1-D space, the size of the mean

value array is two. The first element is the first mean value, and the second is the second mean value. If we

used two normal distributions in a 2-D space, the size of array would be four, and the first two elements

would be the two components of the first normal distribution’s mean vector. We plug-in the k-d tree using

the SetKdTree().

The remaining two methods specify the termination condition. The estimation process stops when the

number of iterations reaches the maximum iteration value set by the SetMaximumIteration(), or the

distances between the newly calculated mean (centroid) values and previous ones are within the

threshold set by the SetCentroidPositionChangesThreshold(). The final step is to call the

StartOptimization() method.

The for loop will print out the mean estimates from the estimation process.

typedef TreeGeneratorType::KdTreeType TreeType;

typedef itk::Statistics::KdTreeBasedKmeansEstimator<TreeType> EstimatorType;

EstimatorType::Pointer estimator = EstimatorType::New(); EstimatorType::ParametersType initialMeans(2);

initialMeans[0] = 0.0; initialMeans[1] = 0.0; estimator->SetParameters(initialMeans);

estimator->SetKdTree(treeGenerator->GetOutput()); estimator->SetMaximumIteration(200);

estimator->SetCentroidPositionChangesThreshold(0.0); estimator->StartOptimization();

EstimatorType::ParametersType estimatedMeans = estimator->GetParameters();

for (unsigned int i = 0; i < 2; ++i) { std::cout << "cluster[" << i << "] " << std::endl;

std::cout << " estimated mean : " << estimatedMeans[i] << std::endl; }

If we are only interested in finding the mean estimates, we might stop. However, to illustrate how a

classifier can be formed using the statistical classification framework. We go a little bit further in this

example.

Since the k-means algorithm is an minimum distance classifier using the estimated k means and the

measurement vectors. We use the EuclideanDistanceMetric class as membership functions.

Our choice for the decision rule is the itk::Statistics::MinimumDecisionRule that

returns the index of the membership functions that have the smallest value for a measurement

vector.

After creating a SampleClassifierFilter object and a MinimumDecisionRule object, we plug-in the

decisionRule and the sample to the classifier. Then, we must specify the number of classes that will be

considered using the SetNumberOfClasses() method.

The remainder of the following code snippet shows how to use user-specified class labels. The classification

result will be stored in a MembershipSample object, and for each measurement vector, its class label will be

one of the two class labels, 100 and 200 (unsigned int).

typedef itk::Statistics::DistanceToCentroidMembershipFunction< MeasurementVectorType > MembershipFunctionType;

typedef itk::Statistics::EuclideanDistanceMetric< MeasurementVectorType > DistanceMetricType;

typedef itk::Statistics::MinimumDecisionRule DecisionRuleType;

DecisionRuleType::Pointer decisionRule = DecisionRuleType::New();

typedef itk::Statistics::SampleClassifierFilter<SampleType> ClassifierType;

ClassifierType::Pointer classifier = ClassifierType::New(); classifier->SetDecisionRule(decisionRule);

classifier->SetInput(sample); classifier->SetNumberOfClasses(2);

typedef ClassifierType::ClassLabelVectorObjectType ClassLabelVectorObjectType;

ClassLabelVectorObjectType::Pointer classLabels = ClassLabelVectorObjectType::New();

classLabels->Get().push_back(100); classLabels->Get().push_back(200);

classifier->SetClassLabels(classLabels);

The classifier is almost ready to do the classification process except that it needs two membership

functions that represents two clusters respectively.

In this example, the two clusters are modeled by two Euclidean distance functions. The distance function

(model) has only one parameter, its mean (centroid) set by the SetOrigin() method. To plug-in two

distance functions, we call the AddMembershipFunction() method. Then invocation of the Update()

method will perform the classification.

typedef ClassifierType::MembershipFunctionVectorObjectType MembershipFunctionVectorObjectType;

MembershipFunctionVectorObjectType::Pointer membershipFunctions =

MembershipFunctionVectorObjectType::New();

// std::vector<MembershipFunctionType::Pointer> membershipFunctions;

DistanceMetricType::OriginType origin( sample->GetMeasurementVectorSize());

int index = 0; for (unsigned int i = 0; i < 2; ++i) {

MembershipFunctionType::Pointer membershipFunction1 = MembershipFunctionType::New();

for (unsigned int j = 0; j < sample->GetMeasurementVectorSize(); ++j)

{ origin[j] = estimatedMeans[index++]; }

DistanceMetricType::Pointer distanceMetric = DistanceMetricType::New();

distanceMetric->SetOrigin(origin); membershipFunction1->SetDistanceMetric( distanceMetric );

// classifier->AddMembershipFunction(membershipFunctions[i].GetPointer());

membershipFunctions->Get().push_back(membershipFunction1.GetPointer() ); }

classifier->SetMembershipFunctions(membershipFunctions); classifier->Update();

The following code snippet prints out the measurement vectors and their class labels in the

sample.

const ClassifierType::MembershipSampleType⋆ membershipSample = classifier->GetOutput();

ClassifierType::MembershipSampleType::ConstIterator iter = membershipSample->Begin();

while (iter != membershipSample->End()) { std::cout << "measurement vector = " << iter.GetMeasurementVector()

<< "class label = " << iter.GetClassLabel() << std::endl; ++iter; }

The Self Organizing Map, SOM, introduced by Kohonen is a non-supervised neural learning algorithm. The

map is composed of neighboring cells which are in competition by means of mutual interactions and they

adapt in order to match characteristic patterns of the examples given during the learning. The SOM is

usually on a plane (2D).

The algorithm implements a nonlinear projection from a high dimensional feature space to a lower

dimension space, usually 2D. It is able to find the correspondence between a set of structured data and a

network of much lower dimension while keeping the topological relationships existing in the

feature space. Thanks to this topological organization, the final map presents clusters and their

relationships.

Kohonen’s SOM is usually represented as an array of cells where each cell is, i, associated to a feature (or

weight) vector mi = ![[mi1,mi2,⋅⋅⋅,min]](SoftwareGuide358x.png) T ∈ℝn (figure 19.3).

T ∈ℝn (figure 19.3).

A cell (or neuron) in the map is a good detector for a given input vector x = ![[x1,x2,⋅⋅⋅,xn]](SoftwareGuide360x.png) T ∈ℝn

if the latter is close to the former. This distance between vectors can be represented by the

scalar product xT ⋅mi, but for most of the cases other distances can be used, as for instance

the Euclidean one. The cell having the weight vector closest to the input vector is called the

winner.

T ∈ℝn

if the latter is close to the former. This distance between vectors can be represented by the

scalar product xT ⋅mi, but for most of the cases other distances can be used, as for instance

the Euclidean one. The cell having the weight vector closest to the input vector is called the

winner.

The goal of the learning step is to get a map which is representative of an input example set. It is an iterative

procedure which consists in passing each input example to the map, testing the response of each neuron and

modifying the map to get it closer to the examples.

Algorithm 1 SOM learning:

- t = 0.

- Initialize the weight vectors of the map (randomly, for instance).

- While t < number of iterations, do:

- k = 0.

- While k < number of examples, do:

- Find the vector mi(t) which minimizes the distance d(xk,mi(t))

- For a neighborhood Nc(t) around the winner cell, apply the transformation:

![mi(t+ 1)= mi(t)+ β(t)[xk(t)- mi(t)]](SoftwareGuide361x.png) | (19.2) |

- k = k+1

- t = t +1.

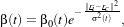

In 19.2, β(t) is a decreasing function with the geometrical distance to the winner cell. For instance:

| (19.3) |

with β0(t) and σ(t) decreasing functions with time and r the cell coordinates in the output – map –

space.

Therefore the algorithm consists in getting the map closer to the learning set. The use of a neighborhood

around the winner cell allows the organization of the map into areas which specialize in the recognition of

different patterns. This neighborhood also ensures that cells which are topologically close are also close in

terms of the distance defined in the feature space.

The source code for this example can be found in the file

Examples/Learning/SOMExample.cxx.

This example illustrates the use of the otb::SOM class for building Kohonen’s Self Organizing

Maps.

We will use the SOM in order to build a color table from an input image. Our input image is coded with

3×8 bits and we would like to code it with only 16 levels. We will use the SOM in order to learn which are

the 16 most representative RGB values of the input image and we will assume that this is the optimal color

table for the image.

The first thing to do is include the header file for the class. We will also need the header files for

the map itself and the activation map builder whose utility will be explained at the end of the

example.

#include "otbSOMMap.h" #include "otbSOM.h" #include "otbSOMActivationBuilder.h"

Since the otb::SOM class uses a distance, we will need to include the header file for the one we want to

use

The Self Organizing Map itself is actually an N-dimensional image where each pixel contains a neuron. In

our case, we decide to build a 2-dimensional SOM, where the neurons store RGB values with floating point

precision.

const unsigned int Dimension = 2; typedef double PixelType;

typedef otb::VectorImage<PixelType, Dimension> ImageType;

typedef ImageType::PixelType VectorType;

The distance that we want to apply between the RGB values is the Euclidean one. Of course we

could choose to use other type of distance, as for instance, a distance defined in any other color

space.

typedef itk::Statistics::EuclideanDistanceMetric<VectorType> DistanceType;

We can now define the type for the map. The otb::SOMMap class is templated over the neuron type –

PixelType here –, the distance type and the number of dimensions. Note that the number of dimensions of

the map could be different from the one of the images to be processed.

typedef otb::SOMMap<VectorType, DistanceType, Dimension> MapType;

We are going to perform the learning directly on the pixels of the input image. Therefore, the image type is

defined using the same pixel type as we used for the map. We also define the type for the imge file

reader.

typedef otb::ImageFileReader<ImageType> ReaderType;

Since the otb::SOM class works on lists of samples, it will need to access the input image through an

adaptor. Its type is defined as follows:

typedef itk::Statistics::ListSample<VectorType> SampleListType;

We can now define the type for the SOM, which is templated over the input sample list and the type of the

map to be produced and the two functors that hold the training behavior.

typedef otb::Functor::CzihoSOMLearningBehaviorFunctor LearningBehaviorFunctorType;

typedef otb::Functor::CzihoSOMNeighborhoodBehaviorFunctor

NeighborhoodBehaviorFunctorType; typedef otb::SOM<SampleListType, MapType,

LearningBehaviorFunctorType, NeighborhoodBehaviorFunctorType> SOMType;

As an alternative to standard SOMType, one can decide to use an otb::PeriodicSOM , which behaves like

otb::SOM but is to be considered to as a torus instead of a simple map. Hence, the neighborhood behavior

of the winning neuron does not depend on its location on the map... otb::PeriodicSOM is defined in

otbPeriodicSOM.h.

We can now start building the pipeline. The first step is to instantiate the reader and pass its output to the

adaptor.

ReaderType::Pointer reader = ReaderType::New(); reader->SetFileName(inputFileName);

reader->Update(); SampleListType::Pointer sampleList = SampleListType::New();

sampleList->SetMeasurementVectorSize(reader->GetOutput()->GetVectorLength());

itk::ImageRegionIterator<ImageType> imgIter (reader->GetOutput(),

reader->GetOutput()->

GetBufferedRegion()); imgIter.GoToBegin();

itk::ImageRegionIterator<ImageType> imgIterEnd (reader->GetOutput(),

reader->GetOutput()->

GetBufferedRegion()); imgIterEnd.GoToEnd(); do

{ sampleList->PushBack(imgIter.Get()); ++imgIter; } while (imgIter != imgIterEnd);

We can now instantiate the SOM algorithm and set the sample list as input.

SOMType::Pointer som = SOMType::New(); som->SetListSample(sampleList);

We use a SOMType::SizeType array in order to set the sizes of the map.

SOMType::SizeType size; size[0] = sizeX; size[1] = sizeY; som->SetMapSize(size);

The initial size of the neighborhood of each neuron is set in the same way.

SOMType::SizeType radius; radius[0] = neighInitX; radius[1] = neighInitY;

som->SetNeighborhoodSizeInit(radius);

The other parameters are the number of iterations, the initial and the final values for the learning rate – β –

and the maximum initial value for the neurons (the map will be randomly initialized).

som->SetNumberOfIterations(nbIterations); som->SetBetaInit(betaInit);

som->SetBetaEnd(betaEnd); som->SetMaxWeight(static_cast<PixelType>(initValue));

Now comes the initialization of the functors.

LearningBehaviorFunctorType learningFunctor; learningFunctor.SetIterationThreshold(radius, nbIterations);

som->SetBetaFunctor(learningFunctor); NeighborhoodBehaviorFunctorType neighborFunctor;

som->SetNeighborhoodSizeFunctor(neighborFunctor); som->Update();

Finally, we set up the las part of the pipeline where the plug the output of the SOM into the writer. The

learning procedure is triggered by calling the Update() method on the writer. Since the map is itself an

image, we can write it to disk with an otb::ImageFileWriter .

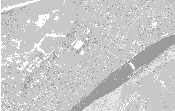

Figure 19.4 shows the result of the SOM learning. Since we have performed a learning on RGB pixel

values, the produced SOM can be interpreted as an optimal color table for the input image. It can be

observed that the obtained colors are topologically organised, so similar colors are also close in the map.

This topological organisation can be exploited to further reduce the number of coding levels of the pixels

without performing a new learning: we can subsample the map to get a new color table. Also, a

bilinear interpolation between the neurons can be used to increase the number of coding levels.

We can now compute the activation map for the input image. The activation map tells us how many times a

given neuron is activated for the set of examples given to the map. The activation map is stored as a scalar

image and an integer pixel type is usually enough.

typedef unsigned char OutputPixelType; typedef otb::Image<OutputPixelType, Dimension> OutputImageType;

typedef otb::ImageFileWriter<OutputImageType> ActivationWriterType;

In a similar way to the otb::SOM class the otb::SOMActivationBuilder is templated

over the sample list given as input, the SOM map type and the activation map to be built as

output.

typedef otb::SOMActivationBuilder<SampleListType, MapType, OutputImageType> SOMActivationBuilderType;

We instantiate the activation map builder and set as input the SOM map build before and the image (using

the adaptor).

SOMActivationBuilderType::Pointer somAct = SOMActivationBuilderType::New();

somAct->SetInput(som->GetOutput()); somAct->SetListSample(sampleList); somAct->Update();

The final step is to write the activation map to a file.

if (actMapFileName != ITK_NULLPTR) { ActivationWriterType::Pointer actWriter = ActivationWriterType::New();

actWriter->SetFileName(actMapFileName);

The righthand side of figure 19.4 shows the activation map obtained.

The source code for this example can be found in the file

Examples/Learning/SOMClassifierExample.cxx.

This example illustrates the use of the otb::SOMClassifier class for performing a classification using

an existing Kohonen’s Self Organizing. Actually, the SOM classification consists only in the attribution of

the winner neuron index to a given feature vector.

We will use the SOM created in section 19.4.2.1 and we will assume that each neuron represents a class in

the image.

The first thing to do is include the header file for the class.

#include "otbSOMClassifier.h"

As for the SOM learning step, we must define the types for the otb::SOMMap, and therefore, also for

the distance to be used. We will also define the type for the SOM reader, which is actually an

otb::ImageFileReader which the appropriate image type.

typedef itk::Statistics::EuclideanDistanceMetric<PixelType> DistanceType;

typedef otb::SOMMap<PixelType, DistanceType, Dimension> SOMMapType;

typedef otb::ImageFileReader<SOMMapType> SOMReaderType;

The classification will be performed by the otb::SOMClassifier , which, as most of the classifiers,

works on itk::Statistics::ListSample s. In order to be able to perform an image classification, we

will need to use the itk::Statistics::ImageToListAdaptor which is templated over the type of

image to be adapted. The SOMClassifier is templated over the sample type, the SOMMap type and the

pixel type for the labels.

typedef itk::Statistics::ListSample<PixelType> SampleType;

typedef otb::SOMClassifier<SampleType, SOMMapType, LabelPixelType> ClassifierType;

The result of the classification will be stored on an image and saved to a file. Therefore, we define the types

needed for this step.

typedef otb::Image<LabelPixelType, Dimension> OutputImageType;

typedef otb::ImageFileWriter<OutputImageType> WriterType;

We can now start reading the input image and the SOM given as inputs to the program. We instantiate the

readers as usual.

ReaderType::Pointer reader = ReaderType::New(); reader->SetFileName(imageFilename);

reader->Update(); SOMReaderType::Pointer somreader = SOMReaderType::New();

somreader->SetFileName(mapFilename); somreader->Update();

The conversion of the input data from image to list sample is easily done using the adaptor.

SampleType::Pointer sample = SampleType::New(); itk::ImageRegionIterator<InputImageType> it(reader->GetOutput(),

reader->GetOutput()->

GetLargestPossibleRegion());

sample->SetMeasurementVectorSize(reader->GetOutput()->GetNumberOfComponentsPerPixel());

it.GoToBegin(); while (!it.IsAtEnd()) { sample->PushBack(it.Get()); ++it; }

The classifier can now be instantiated. The input data is set by using the SetSample() method and the

SOM si set using the SetMap() method. The classification is triggered by using the Update()

method.

ClassifierType::Pointer classifier = ClassifierType::New(); classifier->SetSample(sample.GetPointer());

classifier->SetMap(somreader->GetOutput()); classifier->Update();

Once the classification has been performed, the sample list obtained at the output of the classifier must be

converted into an image. We create the image as follows :

OutputImageType::Pointer outputImage = OutputImageType::New();

outputImage->SetRegions(reader->GetOutput()->GetLargestPossibleRegion()); outputImage->Allocate();

We can now get a pointer to the classification result.

ClassifierType::OutputType⋆ membershipSample = classifier->GetOutput();

And we can declare the iterators pointing to the front and the back of the sample list.

ClassifierType::OutputType::ConstIterator m_iter = membershipSample->Begin();

ClassifierType::OutputType::ConstIterator m_last = membershipSample->End();

We also declare an itk::ImageRegionIterator in order to fill the output image with the class

labels.

typedef itk::ImageRegionIterator<OutputImageType> OutputIteratorType;

OutputIteratorType outIt(outputImage, outputImage->GetLargestPossibleRegion());

We iterate through the sample list and the output image and assign the label values to the image

pixels.

outIt.GoToBegin(); while (m_iter != m_last && !outIt.IsAtEnd()) {

outIt.Set(m_iter.GetClassLabel()); ++m_iter; ++outIt; }

Finally, we write the classified image to a file.

WriterType::Pointer writer = WriterType::New(); writer->SetFileName(outputFilename);

writer->SetInput(outputImage); writer->Update();

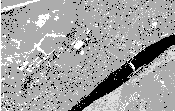

Figure 19.5 shows the result of the SOM classification.

The source code for this example can be found in the file

Examples/Classification/SOMImageClassificationExample.cxx.

In previous examples, we have used the otb::SOMClassifier , which uses the ITK classification

framework. This good for compatibility with the ITK framework, but introduces the limitations

of not being able to use streaming and being able to know at compilation time the number of

bands of the image to be classified. In OTB we have avoided this limitation by developing the

otb::SOMImageClassificationFilter . In this example we will illustrate its use. We start by including

the appropriate header file.

#include "otbSOMImageClassificationFilter.h"

We will assume double precision input images and will also define the type for the labeled

pixels.

const unsigned int Dimension = 2; typedef double PixelType;

typedef unsigned short LabeledPixelType;

Our classifier will be generic enough to be able to process images with any number of bands. We read the

images as otb::VectorImage s. The labeled image will be a scalar image.

typedef otb::VectorImage<PixelType, Dimension> ImageType;

typedef otb::Image<LabeledPixelType, Dimension> LabeledImageType;

We can now define the type for the classifier filter, which is templated over its input and output image types

and the SOM type.

typedef otb::SOMMap<ImageType::PixelType> SOMMapType; typedef otb::SOMImageClassificationFilter<ImageType,

LabeledImageType, SOMMapType> ClassificationFilterType;

And finally, we define the readers (for the input image and theSOM) and the writer. Since the images, to

classify can be very big, we will use a streamed writer which will trigger the streaming ability of the

classifier.

typedef otb::ImageFileReader<ImageType> ReaderType;

typedef otb::ImageFileReader<SOMMapType> SOMReaderType;

typedef otb::ImageFileWriter<LabeledImageType> WriterType;

We instantiate the classifier and the reader objects and we set the existing SOM obtained in a previous

training step.

ClassificationFilterType::Pointer filter = ClassificationFilterType::New();

ReaderType::Pointer reader = ReaderType::New(); reader->SetFileName(infname);

SOMReaderType::Pointer somreader = SOMReaderType::New(); somreader->SetFileName(somfname);

somreader->Update(); filter->SetMap(somreader->GetOutput());

We plug the pipeline and trigger its execution by updating the output of the writer.

filter->SetInput(reader->GetOutput()); WriterType::Pointer writer = WriterType::New();

writer->SetInput(filter->GetOutput()); writer->SetFileName(outfname); writer->Update();

The source code for this example can be found in the file

Examples/Classification/BayesianPluginClassifier.cxx.

In this example, we present a system that places measurement vectors into two Gaussian classes. The

Figure 19.6 shows all the components of the classifier system and the data flow. This system differs from

the previous k-means clustering algorithms in several ways. The biggest difference is that this classifier

uses the itk::Statistics::GaussianMembershipFunction as membership functions

instead of the itk::Statistics::EuclideanDistanceMetric . Since the membership

function is different, it requires a different set of parameters, mean vectors and covariance

matrices. We choose the itk::Statistics::MeanSampleFilter (sample mean) and the

itk::Statistics::CovarianceSampleFilter (sample covariance) for the estimation algorithms of the

two parameters. If we want a more robust estimation algorithm, we can replace these estimation

algorithms with additional alternatives without changing other components in the classifier

system.

It is a bad idea to use the same sample for both testing and training (parameter estimation) of the

parameters. However, for simplicity, in this example, we use a sample for test and training.

We use the itk::Statistics::ListSample as the sample (test and training). The itk::Vector is our

measurement vector class. To store measurement vectors into two separate sample containers, we use the

itk::Statistics::Subsample objects.

#include "itkVector.h" #include "itkListSample.h" #include "itkSubsample.h"

The following two files provides us with the parameter estimation algorithms.

#include "itkMeanSampleFilter.h" #include "itkCovarianceSampleFilter.h"

The following files define the components required by ITK statistical classification framework: the decision

rule, the membership function, and the classifier.

#include "itkMaximumRatioDecisionRule.h" #include "itkGaussianMembershipFunction.h"

#include "itkSampleClassifierFilter.h"

We will fill the sample with random variables from two normal distributions using the

itk::Statistics::NormalVariateGenerator .

#include "itkNormalVariateGenerator.h"

Since the NormalVariateGenerator class only supports 1-D, we define our measurement vector type as a one

component vector. We then, create a ListSample object for data inputs.

We also create two Subsample objects that will store the measurement vectors in sample into two separate

sample containers. Each Subsample object stores only the measurement vectors belonging to a single class.

This class sample will be used by the parameter estimation algorithms.

typedef itk::Vector<double, 1> MeasurementVectorType;

typedef itk::Statistics::ListSample<MeasurementVectorType> SampleType;

SampleType::Pointer sample = SampleType::New();

sample->SetMeasurementVectorSize(1); // length of measurement vectors

// in the sample. typedef itk::Statistics::Subsample<SampleType> ClassSampleType;

std::vector<ClassSampleType::Pointer> classSamples; for (unsigned int i = 0; i < 2; ++i) {

classSamples.push_back(ClassSampleType::New()); classSamples[i]->SetSample(sample); }

The following code snippet creates a NormalVariateGenerator object. Since the random variable generator

returns values according to the standard normal distribution (the mean is zero, and the standard deviation is

one), before pushing random values into the sample, we change the mean and standard deviation. We need

two normally (Gaussian) distributed datasets. We have two for loops, within which each uses a different

mean and standard deviation. Before we fill the sample with the second distribution data, we call

Initialize(random seed) method, to recreate the pool of random variables in the normalGenerator. In

the second for loop, we fill the two class samples with measurement vectors using the AddInstance()

method.

To see the probability density plots from the two distributions, refer to Figure 19.2.

typedef itk::Statistics::NormalVariateGenerator NormalGeneratorType;

NormalGeneratorType::Pointer normalGenerator = NormalGeneratorType::New();

normalGenerator->Initialize(101); MeasurementVectorType mv;

double mean = 100; double standardDeviation = 30;

SampleType::InstanceIdentifier id = 0UL; for (unsigned int i = 0; i < 100; ++i) {

mv.Fill((normalGenerator->GetVariate() ⋆ standardDeviation) + mean); sample->PushBack(mv);

classSamples[0]->AddInstance(id); ++id; } normalGenerator->Initialize(3024);

mean = 200; standardDeviation = 30; for (unsigned int i = 0; i < 100; ++i)

{ mv.Fill((normalGenerator->GetVariate() ⋆ standardDeviation) + mean);

sample->PushBack(mv); classSamples[1]->AddInstance(id); ++id; }

In the following code snippet, notice that the template argument for the MeanSampleFilter and

CovarianceFilter is ClassSampleType (i.e., type of Subsample) instead of SampleType (i.e. type

of ListSample). This is because the parameter estimation algorithms are applied to the class

sample.

typedef itk::Statistics::MeanSampleFilter<ClassSampleType> MeanEstimatorType;

typedef itk::Statistics::CovarianceSampleFilter<ClassSampleType>

CovarianceEstimatorType; std::vector<MeanEstimatorType::Pointer> meanEstimators;

std::vector<CovarianceEstimatorType::Pointer> covarianceEstimators; for (unsigned int i = 0; i < 2; ++i)

{ meanEstimators.push_back(MeanEstimatorType::New()); meanEstimators[i]->SetInput(classSamples[i]);

meanEstimators[i]->Update(); covarianceEstimators.push_back(CovarianceEstimatorType::New());

covarianceEstimators[i]->SetInput(classSamples[i]);

//covarianceEstimators[i]->SetMean(meanEstimators[i]->GetOutput());

covarianceEstimators[i]->Update(); }

We print out the estimated parameters.

for (unsigned int i = 0; i < 2; ++i) {

std::cout << "class[" << i << "] " << std::endl; std::cout << " estimated mean : "

<< meanEstimators[i]->GetMean() << " covariance matrix : "

<< covarianceEstimators[i]->GetCovarianceMatrixOutput()->Get() << std::endl; }

After creating a SampleClassifierFilter object and a MaximumRatioDecisionRule object, we plug in the

decisionRule and the sample to the classifier. We then specify the number of classes that will be

considered using the SetNumberOfClasses() method.

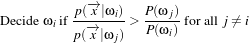

The MaximumRatioDecisionRule requires a vector of a priori probability values. Such a priori probability

will be the P(ωi) of the following variation of the Bayes decision rule:

| (19.4) |

The remainder of the code snippet demonstrates how user-specified class labels are used. The classification

result will be stored in a MembershipSample object, and for each measurement vector, its class label will be

one of the two class labels, 100 and 200 (unsigned int).

typedef itk::Statistics::GaussianMembershipFunction <MeasurementVectorType> MembershipFunctionType;

typedef itk::Statistics::MaximumRatioDecisionRule DecisionRuleType;

DecisionRuleType::Pointer decisionRule = DecisionRuleType::New();

DecisionRuleType::PriorProbabilityVectorType aPrioris; aPrioris.push_back(classSamples[0]->GetTotalFrequency()

/ sample->GetTotalFrequency()); aPrioris.push_back(classSamples[1]->GetTotalFrequency()

/ sample->GetTotalFrequency()); decisionRule->SetPriorProbabilities(aPrioris);

typedef itk::Statistics::SampleClassifierFilter<SampleType> ClassifierType;

ClassifierType::Pointer classifier = ClassifierType::New();

classifier->SetDecisionRule(dynamic_cast< itk::Statistics::DecisionRule⋆ >( decisionRule.GetPointer()));

classifier->SetInput(sample); classifier->SetNumberOfClasses(2); std::vector<long unsigned int> classLabels;

classLabels.resize(2); classLabels[0] = 100; classLabels[1] = 200;

typedef itk::SimpleDataObjectDecorator<std::vector<long unsigned int> > ClassLabelDecoratedType;

ClassLabelDecoratedType::Pointer classLabelsDecorated = ClassLabelDecoratedType::New();

classLabelsDecorated->Set(classLabels); classifier->SetClassLabels(classLabelsDecorated);

The classifier is almost ready to perform the classification except that it needs two membership

functions that represent the two clusters.

In this example, we can imagine that the two clusters are modeled by two Euclidean distance functions. The

distance function (model) has only one parameter, the mean (centroid) set by the SetOrigin() method. To

plug-in two distance functions, we call the AddMembershipFunction() method. Finally, the invocation of

the Update() method will perform the classification.

typedef ClassifierType::MembershipFunctionType MembershipFunctionBaseType;

typedef ClassifierType::MembershipFunctionVectorObjectType::ComponentType ComponentMembershipType;

// Vector Containing the membership function used

ComponentMembershipType membershipFunctions; for (unsigned int i = 0; i < 2; i++)